🧠 Introduction: One Paper, A Decade of Transformation

Over the last 10 years, AI has exploded, from smart assistants to self-driving cars. But behind this revolution, a handful of research papers laid the groundwork. One paper stands out above all: “Attention Is All You Need.” It introduced the Transformer architecture, and nothing in AI has been the same since. From ChatGPT to language translation and image generation, this single idea reshaped the field.

The Most Impactful AI Research Paper of the Decade Explained in Simple Terms

But what is it really about? Let’s break it down—no PhD required.

📄 What Is the Paper “Attention Is All You Need”?

Published in 2017 by researchers at Google, this paper introduced the Transformer model, a new way of building AI systems for understanding language that was first demonstrated on machine translation.

Before this, most language models were recurrent networks (RNNs and LSTMs) that read a sentence one word at a time, which made training slow and hard to parallelize. Transformers changed that by using self-attention, which lets a model process all the words in parallel and focus on what matters most in a sentence.

⚙️ How the Transformer Model Works (Simplified)

The magic of the Transformer lies in one concept: attention.

Instead of processing words one-by-one like older models, Transformers:

- 👀 Look at the whole sentence at once
- 🧠 Assign importance (attention) to each word based on its role
- 🔁 Use layers to build deeper understanding
- ⚡ Work much faster and scale better

This is why large models like GPT-4 and BERT exist today: both are built on the Transformer architecture.
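To make "look at the whole sentence at once and assign importance to each word" a little more concrete, here is a minimal NumPy sketch of scaled dot-product self-attention. It is an illustration, not the paper's code: the sizes, the random "word vectors", and the random projection matrices `w_q`, `w_k`, `w_v` are all assumptions made up for the example (in a real model they are learned).

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row max before exponentiating for numerical stability.
    shifted = x - x.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)

def self_attention(x, w_q, w_k, w_v):
    """Scaled dot-product self-attention over one sentence.

    x: (seq_len, d_model) array, one row per word.
    w_q, w_k, w_v: (d_model, d_k) projections (random here, learned in practice).
    """
    q = x @ w_q                          # what each word is looking for
    k = x @ w_k                          # what each word offers
    v = x @ w_v                          # the content that gets mixed together
    d_k = q.shape[-1]
    scores = q @ k.T / np.sqrt(d_k)      # (seq_len, seq_len): every word vs. every word
    weights = softmax(scores, axis=-1)   # each row is one word's attention over the sentence
    return weights @ v, weights

# Hypothetical toy input: 5 "words", each a 16-dimensional vector.
rng = np.random.default_rng(0)
seq_len, d_model, d_k = 5, 16, 8
x = rng.normal(size=(seq_len, d_model))
w_q, w_k, w_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))

output, weights = self_attention(x, w_q, w_k, w_v)
print(weights.shape)         # (5, 5)
print(weights.sum(axis=-1))  # each row sums to (approximately) 1
```

Each row of `weights` says how much one word attends to every other word, and the whole table is computed with a couple of matrix products rather than a word-by-word loop.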

🔍 Key Concepts Introduced

Here are the core ideas the paper introduced:

- Self-Attention Mechanism: Each word looks at every other word in the sentence to decide which ones to pay attention to.
- Multi-Head Attention: Multiple attention mechanisms ("heads") run in parallel to capture different relationships.
- Positional Encoding: Since attention on its own ignores word order, this adds position information so the model knows where each word sits.
- Layered Architecture: Multiple blocks are stacked to learn deeper meanings.
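Two of the ideas above, positional encoding and multi-head attention, can be sketched in a few lines of NumPy. This is a rough sketch under stated assumptions: the sinusoidal formula follows the one given in the paper, but the function names, the toy sizes, and the random weight matrices (`w_q`, `w_k`, `w_v`, `w_o`) are invented for illustration, and `d_model` is assumed to be even and divisible by `num_heads`.

```python
import numpy as np

def positional_encoding(seq_len, d_model):
    """Sinusoidal encoding: sine on even dimensions, cosine on odd ones."""
    positions = np.arange(seq_len)[:, None]        # (seq_len, 1)
    even_dims = np.arange(0, d_model, 2)[None, :]  # (1, d_model / 2)
    angles = positions / np.power(10000.0, even_dims / d_model)
    pe = np.zeros((seq_len, d_model))
    pe[:, 0::2] = np.sin(angles)
    pe[:, 1::2] = np.cos(angles)
    return pe

def softmax(x, axis=-1):
    shifted = x - x.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)

def multi_head_attention(x, w_q, w_k, w_v, w_o, num_heads):
    """Run several attention heads in parallel, then merge their outputs."""
    seq_len, d_model = x.shape
    d_head = d_model // num_heads

    def split_heads(t):
        # (seq_len, d_model) -> (num_heads, seq_len, d_head)
        return t.reshape(seq_len, num_heads, d_head).transpose(1, 0, 2)

    q, k, v = split_heads(x @ w_q), split_heads(x @ w_k), split_heads(x @ w_v)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)  # (num_heads, seq_len, seq_len)
    weights = softmax(scores, axis=-1)
    heads = weights @ v                                   # (num_heads, seq_len, d_head)
    concat = heads.transpose(1, 0, 2).reshape(seq_len, d_model)
    return concat @ w_o                                   # final output projection

# Hypothetical toy input: 6 words, 16-dimensional embeddings, 4 heads.
rng = np.random.default_rng(0)
seq_len, d_model, num_heads = 6, 16, 4
embeddings = rng.normal(size=(seq_len, d_model))
pe = positional_encoding(seq_len, d_model)
x = embeddings + pe  # word order is injected by simple addition
w_q, w_k, w_v, w_o = (rng.normal(size=(d_model, d_model)) * 0.1 for _ in range(4))

out = multi_head_attention(x, w_q, w_k, w_v, w_o, num_heads)
print(out.shape)  # (6, 16)
```

A full Transformer block would also add residual connections, layer normalization, and a feed-forward layer around this, which are left out here to keep the sketch short.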

📊 Impact Compared to Other Models (Table Overview)

| Feature | RNN/LSTM 🔄 | Transformer ⚡ |
|---|---|---|
| Processes Words In Order | Yes | No (Parallel) |
| Requires a Lot of Training Time | Yes | Less |
| Captures Long-Range Context | Limited | Excellent |
| Supports Large-Scale Models | Poor | Perfect Fit |
| Dominates State-of-the-Art | No | Yes |
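The first and third rows of the table are easier to see in code. The sketch below is a simplified illustration, not either architecture in full: it uses a bare-bones recurrent update and an attention step with no learned projections, and every size and weight in it is an assumption chosen for the example.

```python
import numpy as np

rng = np.random.default_rng(0)
seq_len, d = 6, 8
x = rng.normal(size=(seq_len, d))  # hypothetical sentence: 6 word vectors

# Recurrent style: each hidden state depends on the previous one,
# so the time steps have to run one after another.
w_x = rng.normal(size=(d, d)) * 0.1
w_h = rng.normal(size=(d, d)) * 0.1
h = np.zeros(d)
rnn_states = []
for x_t in x:
    h = np.tanh(x_t @ w_x + h @ w_h)
    rnn_states.append(h)
rnn_states = np.stack(rnn_states)  # (seq_len, d)

# Attention style: one matrix product compares every word with every
# other word, so there is no loop over positions.
scores = x @ x.T / np.sqrt(d)
scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
weights = np.exp(scores)
weights = weights / weights.sum(axis=-1, keepdims=True)
attn_states = weights @ x          # (seq_len, d)

# The last word reaches the first word directly through a single weight,
# instead of through a chain of seq_len - 1 recurrent updates.
print(weights[-1, 0])
```

The loop in the recurrent half cannot be split across positions, while the attention half is plain matrix math that hardware can run in parallel.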

🚀 Why This Paper Changed Everything

-

🧬 Foundation for GPT, BERT, T5, and more

-

🌐 Enabled massive improvements in translation, summarization, and search

-

🧩 Paved the way for multimodal AI (language + vision)

-

📈 Improved scalability, making billion-parameter models possible

-

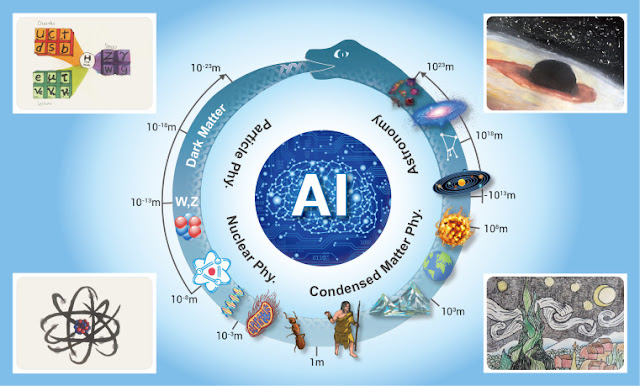

🧠 Inspired AI beyond language—used in vision, audio, biology, and robotics

This wasn’t just a paper. It was the birth certificate of the AI models we use every day.

💡 Real-World Applications of the Transformer Architecture

Here’s where you’ve seen its power without realizing it:

- 🗣️ Chatbots (like GPT models)
- 📚 Language translation apps
- 📷 AI image generators
- 🧬 Protein folding prediction tools
- 🔎 Smarter search engines
- 🎥 Automated video summarization
- 📄 Sentiment analysis in customer feedback

🧪 The Paper's Legacy in 2026 and Beyond

Fast forward to today, and this one research paper continues to influence nearly every major AI innovation.

In fact, when a new model launches, whether for text, audio, or images, it very likely builds on the Transformer.

🧠 Long-Term Effects

- 🏗️ Changed how AI architectures are designed
- 💰 Led to massive funding in large language models
- 🌍 Democratized AI tools via open-source Transformer models
- 👥 Inspired a new generation of researchers and developers

✅ Conclusion: Why This Paper Still Matters Today

The most impactful AI paper of the last decade isn’t just a brilliant piece of research—it’s the foundation of modern artificial intelligence.

Its influence is everywhere, from your phone to your favorite AI assistant. And as models grow more capable, the legacy of “Attention Is All You Need” continues to evolve.

💡 One idea. One paper. A decade of disruption.
